The promises of chance

10.08.2018

Chance does not have habits. It therefore runs counter to any deterministic law in which knowledge is used to predict an effect from a cause. Irregular, chance seems driven by whims. However, it is not unpredictable, and making it predictable is the function of probabilities and other stochastic calculations.

Everyday language never quantifies the uncertain. Adjectives evaluate it vaguely, from the impossible to the certain by way of the improbable, the doubtful and the plausible. There is no scale for what we cannot predict, only a small band of equally vague synonyms: fortuitous, possible, random, uncertain, accidental, unforeseen, unexpected, incidental, etc. Yet into the gap left by this vagueness are thrown irrational beliefs, from optimistic to pessimistic, from faith in luck to the certainty of being cursed, along with other claims whose rationality is hidden in their proof, such as divine justice and the law of karma, and finally superstitions and all sorts of propitiatory prayers and rites to win over “Fortune”, this deity of whom we barely know what earns her rewards…

When we confirm that we are having a coffee together tomorrow, we are talking about our intention, but it is a long way from there to the coffee! Between the firm intention and the steaming coffee lies a random component. And probably nothing happens without a share of uncertainty. “Nothing is ever certain”, we whisper, lowering our head; “if God wishes it”, we think, raising our eyes to the sky. Individually and collectively, we do not really appreciate how reassuring this is!

But this leaves out the mathematical assessment of reality… Probability is the numerical quantity that measures how likely an event is, on a scale from 0 to 1, often expressed as a percentage. Probability theory and statistics are the branches of mathematics that study phenomena characterised by chance and uncertainty. “Nothing is more glorious than to be able to give rules to things, which, being dependent on chance, seem to know none, and thereby escape human reason”, wrote Christiaan Huygens (1629-1695).

This is an old story that can only be told by those who understand it. So we will say as little as possible about it. The origin of the calculation of probabilities is attributed to the discussions of a problem between Blaise Pascal (1623-1662) and Pierre de Fermat (?-1665). Then philosophers, mathematicians, naturalists, botanists, chemists and physicists… all took it up, each in their own field: the assessment of chance is central to knowledge, which is itself used to build predictability.

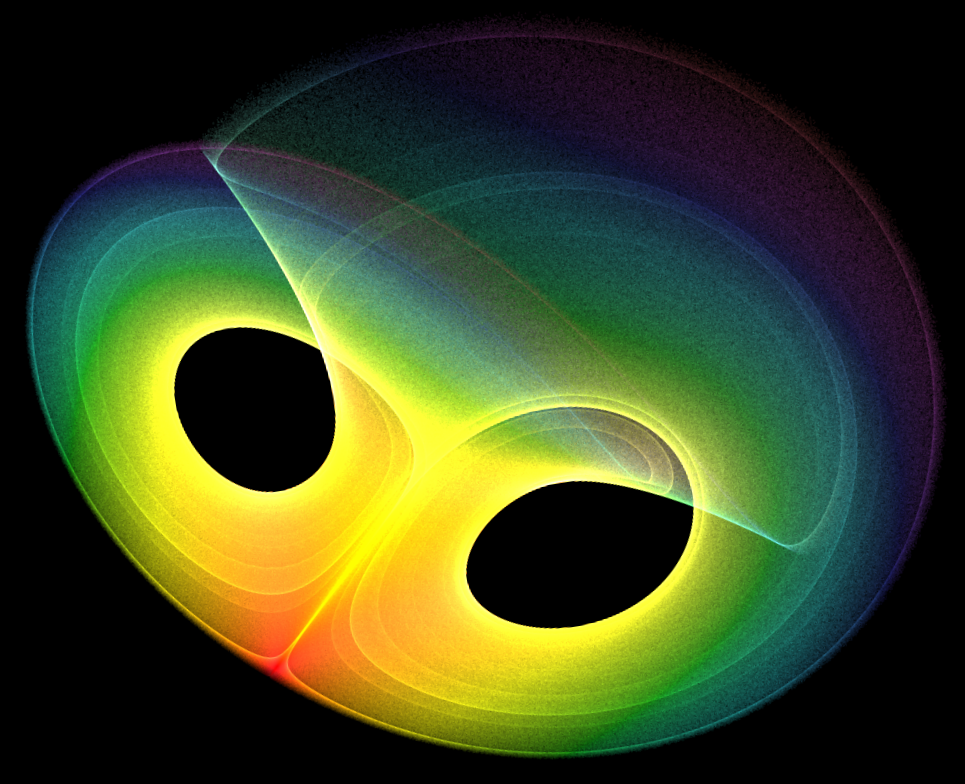

The mathematical description of the random movement observed by the botanist Robert Brown (1773-1858) seems to have been a touchstone in this search: this Brownian motion would be studied by the greatest scholars, including Albert Einstein (1879-1955) and Paul Langevin (1872-1946). The names of A. A. Markov (1856-1922), Kiyoshi Itô (1915-2008) and Norbert Wiener (1894-1964), the founder of cybernetics, the theory of information and organisation, are often quoted among the mathematicians who stand out as the source of stochastic calculus, which studies random phenomena over time. Stochastic calculus is presented as a specific extension of the theory of probability, whose first rigorous mathematical formulation is attributed to A. N. Kolmogorov (1903-1987). The theory has constantly developed and become more complex through experiments in other fields of research, starting with quantum mechanics, which studies the physical behaviour of particles on the atomic and subatomic scale, particularly with the equation of the physicist Erwin Schrödinger (1887-1961). Such big names would not be so famous without their peers: it is a vast network of human intelligence which works, questions, discovers, disproves, proves, challenges and rebuilds new foundations, passing on the baton. Together they make up the great living adventure of the search for rational and experimental knowledge. Yet this knowledge must also understand and predict chance.

This rough overview of the history of the modelling of chance must include the famous “Turing machine”, the conceptual tool designed by Alan Turing (1912-1954) in 1936, as well as the work of the mathematicians John von Neumann (1903-1957) and Claude Shannon (1916-2001). With the aforementioned Norbert Wiener, these extraordinary minds were among the first to consider and lay the foundations for modelling the relationship between mathematics, technology and information. They were at the forefront of the invention of computing, and Shannon introduced the bit, the binary digit which is the unit of measurement for information in computing and is still in use today. The notion of quantity of information is based on probability: information is the measure of an uncertainty, calculated from the probability of an event.
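To make this link between probability and information concrete, here is a minimal sketch in Python. It assumes Shannon's usual definition, in which an event of probability p carries log2(1/p) bits of information; the function names `self_information` and `entropy` are illustrative choices, not taken from any source cited here.

```python
import math


def self_information(p: float) -> float:
    """Information, in bits, carried by an event of probability p."""
    if not 0.0 < p <= 1.0:
        raise ValueError("p must lie in the interval (0, 1]")
    # The rarer the event, the larger 1/p, and the more bits it carries.
    return math.log2(1.0 / p)


def entropy(probabilities) -> float:
    """Average information, in bits, of a probability distribution."""
    # Outcomes with probability 0 never occur and contribute nothing.
    return sum(p * self_information(p) for p in probabilities if p > 0)


if __name__ == "__main__":
    print(self_information(0.5))   # a fair coin toss
    print(self_information(0.125)) # one outcome among eight equally likely ones
    print(entropy([0.5, 0.5]))     # average uncertainty of a fair coin
```

A certain event (p = 1) carries no information at all, while a fair coin toss carries exactly one bit: the less probable the event, the more we learn when it happens.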

Along with quantum mechanics, the fields of application of probabilities, including stochastic calculus, extend to chemistry, meteorology, financial mathematics and image processing. These fields overlap with mathematical statistics and algorithmic data processing, from the natural sciences (physics, biology, etc.) to the life and social sciences such as medicine, economics, the environment, demographics, sociology, political science, etc. No theoretical or practical field can do without them. In the era of Artificial Intelligence and “Big Data”, that is, the collection, storage and analytical processing of digital data on a scale previously unknown in the history of humankind, it seems that all the fields of theoretical and applied knowledge are working to reduce randomness and unpredictability. The law of large numbers and chaos theory bring chance within the reach of laws, allowing more rigorous projections of the future and of the actions of everyone. Behavioural whims can be modelled in aggregate, that is, quantitatively…
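The law of large numbers mentioned above can be illustrated with a simple simulation. This is only a sketch under stated assumptions: a fair coin, simulated with Python's standard pseudo-random generator, and a hypothetical helper named `heads_frequency`.

```python
import random


def heads_frequency(n_tosses: int, seed: int = 0) -> float:
    """Fraction of heads observed in n_tosses simulated tosses of a fair coin."""
    rng = random.Random(seed)  # fixed seed so the experiment can be repeated
    heads = sum(rng.random() < 0.5 for _ in range(n_tosses))
    return heads / n_tosses


if __name__ == "__main__":
    # Each single toss is unpredictable, yet the observed frequency
    # tends to settle near the probability 0.5 as the tosses accumulate.
    for n in (10, 1_000, 100_000):
        print(n, heads_frequency(n))
```

No single toss can be foreseen, but the proportion of heads over a very large number of tosses can be: this is the sense in which the behaviour of the mass is more predictable than that of the individual.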

This principle of calculated uncertainty, developed in the sciences, is gradually becoming an ideal for regulating human activities, particularly when these are digitally traceable and represent so much data and information to be processed, correlated and measured. Knowing, calculating, understanding, speculating, channelling, then controlling: everyone’s fate has its share of chances of complying with the common rules of populations understood as masses. Can we predict the snowflake that decides the avalanche? If this is possible and we remove it, can we predict and neutralise all the threatening snowflakes in order to prevent this avalanche? (dialogue between J. von Neumann and N. Wiener). In other words, could the understanding of societies be reduced to meteorological science? Some people hope so and are working on it. However, no one seems to know the date and time of the next stock market crash.

Even if we learn to predict chance, chance will always be what we had not expected. The paradox would be to want to eradicate chance, etymologically associated with gambling and risk, when it seems that all the laws of the universe, and even causality itself, are derived from its creative and dynamic principle.